Celebrity-Persona Companions: Consent, Verification, and Safer Use

Celebrity-themed AI companions sit at the crossroads of fandom, intimacy, and identity. When someone searches for a phrase like “richelle ryan ai chat,” the goal is usually straightforward: a conversation experience that feels flirtatious, responsive, and familiar.

The problem is that the market behind celebrity-persona chat is mixed. Some experiences are authorized and transparent.

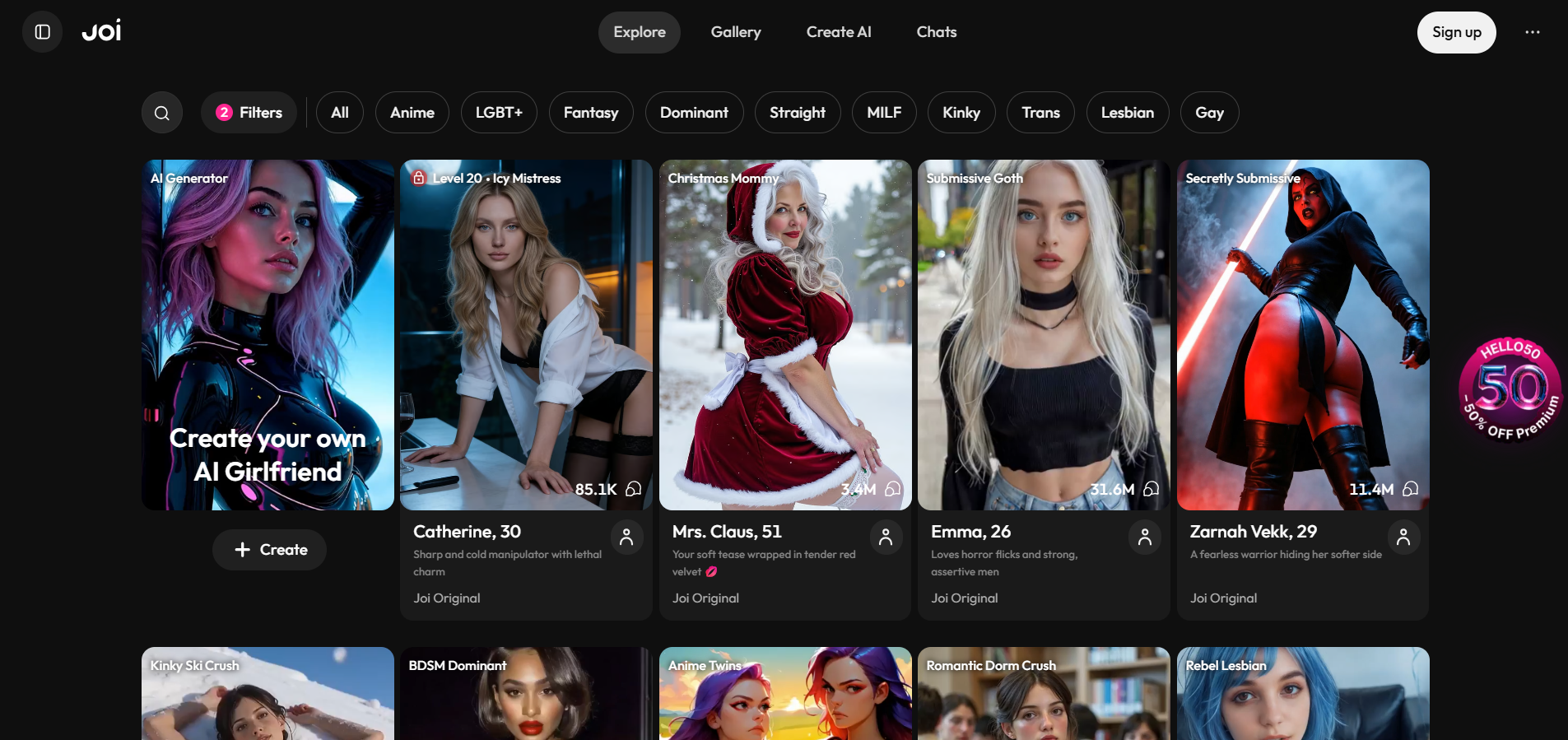

Others are “inspired” characters that clearly state they are fictional. And a significant portion is murky—built on implication, aggressive paywalls, and identity misuse.

This article is a practical consumer guide. It explains how these products typically work, what ethical lines matter (especially consent), how to spot high-risk funnels, and how to use digital companionship without letting it replace real-world connection or damage existing relationships.

1) Three categories that look similar but behave differently

A landing page can make many products look identical. The underlying category matters.

Authorized (licensed/partnered): The public figure (or rights holder) has agreed to the use of their name/likeness/branding, and the platform states it clearly. Expect visible terms, consistent branding, and support channels.

Fictionalized (clearly not affiliated): The persona borrows a general archetype (“confident performer,” “flirty streamer vibe”) but includes clear language that it is not the person and not officially connected. This can be ethically acceptable when it is honest and avoids misleading imagery.

Impersonation (non-consensual identity use): The product implies the real person is involved or uses their identity in a sexualized context without permission. This category overlaps heavily with scams, harassment ecosystems, and privacy risks.

The dividing line is not how “good” the chat feels. It is whether consent and transparency exist.

2) Why these chats become emotionally sticky fast

Celebrity-persona chat tends to feel “special” quickly because it combines three reinforcement mechanisms:

- Familiarity effect: recognizable cues lower social friction.

- Parasocial bonding: the brain treats repeated exposure as social closeness, even if the relationship is one-sided.

- Instant validation: rapid replies feel like availability, which the nervous system reads as safety.

These are normal human responses. The danger is when a product intentionally exploits them to push spending or to encourage secrecy.

3) A verification ladder that takes three minutes

Most users do not want to do research. A simple ladder reduces risk before time, data, or money is invested.

Step A: Transparency check

- Does the platform plainly state whether the persona is authorized or not?

- Is there a clear disclaimer if it is not affiliated?

- Are privacy and content rules written in plain language?

Step B: Accountability check

- Is there a visible support contact and a working help center?

- Are refund/subscription terms explicit?

- Can chat history be reset or deleted?

Step C: Transaction safety check

- Are payments handled through standard checkout methods?

- Is pricing stable (not changing mid-chat)?

- Is there pressure to pay off-platform or “verify” via unusual methods?

If Step A fails, treat the experience as low trust. If Step C fails, do not pay.
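The ladder's decision rule can be sketched in a few lines of Python. This is only an illustration: the names `LadderResult` and `recommend` are hypothetical, and two choices here are assumptions rather than rules from the guide, namely that a failed Step C outranks a failed Step A, and that a failed Step B alone means "use caution" (the guide gives no explicit rule for Step B).

```python
from dataclasses import dataclass


@dataclass
class LadderResult:
    transparency_ok: bool    # Step A: authorization/disclaimer stated plainly
    accountability_ok: bool  # Step B: support, refund terms, deletion
    transaction_ok: bool     # Step C: standard checkout, stable pricing


def recommend(result: LadderResult) -> str:
    """Map the three checks to a recommendation."""
    if not result.transaction_ok:
        # From the guide: if Step C fails, do not pay.
        return "do not pay"
    if not result.transparency_ok:
        # From the guide: if Step A fails, treat as low trust.
        return "low trust"
    if not result.accountability_ok:
        # Assumption: the guide states no rule for a Step B failure.
        return "use caution"
    return "proceed within limits"


print(recommend(LadderResult(True, True, False)))
print(recommend(LadderResult(False, True, True)))
```

Checking Step C first means a product that fails both transparency and transaction safety gets the stricter of the two outcomes.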

4) Red flags that often indicate a manipulative funnel or scam

Celebrity-persona intimacy products are magnets for scam operators because demand is concentrated and users may feel embarrassed to report. Common red flags include:

- claims like “this is the real person” without clear authorization statements

- urgent exclusivity (“only you,” “VIP now,” “respond immediately”) within minutes

- endless “unlock ladders” that keep moving the goalposts

- requests to switch to another app for “private” payments

- gift-card/crypto/off-platform payment requests

- vague “age verification” that asks for credit card details

- broken or missing policies, no clear refund terms

A helpful rule: romance does not need urgency to exist; scams do.

5) Privacy rules for high-sensitivity chat

Chat feels private, but it should be treated as not private. Keep personal risk low by not sharing:

- legal name, address, workplace, daily schedule

- personal photos that include face, tattoos, unique backgrounds

- financial details beyond normal checkout

- information about other people who did not consent

A simple boundary: if it would be uncomfortable to read aloud in a café, it does not belong in a chatbot conversation.

6) A table for evaluating the product in one glance

Use this table before subscribing or sharing any identifying details.

| Area | Lower-risk signal | Higher-risk signal | Practical move |
| --- | --- | --- | --- |
| Authorization | clearly states licensed or clearly not affiliated | implies “real person” without proof | don’t pay |
| Pricing | simple plan, stable terms | shifting paywalls, constant upsells | set a cap, exit early |
| Privacy | clear retention + deletion options | no policy or vague language | share less, avoid uploads |
| Support | real contact + refunds | missing help or evasive replies | don’t subscribe |
| Content safety | boundaries + reporting | “anything goes” pitch | keep use minimal |

7) Emotional boundaries: the Replace vs Support test

Even a legitimate product can become unhealthy if it replaces real life. For one week, track a single number around each session, rated 0–10:

- Before the session: willingness to contact friends, go outside, plan a date, or do a hobby

- After the session: the same willingness, rated again

If motivation repeatedly drops after use, it is a replacement pattern. Replacement patterns often show up with these behaviors: missed sleep, canceled plans, lowered interest in real dating, and rising spending to “chase novelty.”
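For readers who prefer to log the numbers, the test can be sketched as a small Python function. The function name and the threshold are assumptions: the guide says only that motivation “repeatedly” drops, so `min_drops=3` is an illustrative cutoff, not a validated one.

```python
def is_replacement_pattern(ratings, min_drops=3):
    """ratings: list of (before, after) scores, each 0-10, one per session.

    Returns True when the after-score is lower than the before-score
    in at least `min_drops` sessions (an assumed reading of "repeatedly").
    """
    drops = sum(1 for before, after in ratings if after < before)
    return drops >= min_drops


# One hypothetical week of sessions: (before, after)
week = [(7, 5), (6, 6), (6, 4), (5, 3), (6, 7)]
print(is_replacement_pattern(week))
```

In this made-up week, three of five sessions end with a lower score, so the function flags a replacement pattern under the assumed threshold.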

8) A realistic case vignette (and the clean exit)

A typical arc looks like this: the chat begins with flattering messages, then quickly introduces a paywall promising “more personal” access.

After one payment, the experience pushes a higher tier for “exclusive replies,” then another tier for “custom attention.” The user continues paying to make the first purchase feel justified.

A clean exit strategy:

- decide a monthly budget before the first session

- use a timer for every session

- set an exit condition (“if pricing shifts mid-chat, I stop”)

- never pay off-platform

This is pre-commitment. It protects against spur-of-the-moment decisions that feel good for five minutes and bad for five days.
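The four exit rules can also be written down as an explicit checklist, which makes them harder to renegotiate mid-session. The sketch below is hypothetical throughout: `ExitPlan`, `stop_reasons`, and the example amounts are illustrative names and values, not part of any real product or API.

```python
from dataclasses import dataclass


@dataclass
class ExitPlan:
    monthly_budget: float  # decided before the first session
    session_minutes: int   # timer length used for every session


def stop_reasons(plan, spent_this_month, minutes_elapsed,
                 price_changed_mid_chat=False, off_platform_request=False):
    """Return every pre-committed rule that currently says 'stop'."""
    reasons = []
    if spent_this_month >= plan.monthly_budget:
        reasons.append("monthly budget reached")
    if minutes_elapsed >= plan.session_minutes:
        reasons.append("session timer expired")
    if price_changed_mid_chat:
        reasons.append("pricing shifted mid-chat")
    if off_platform_request:
        reasons.append("asked to pay off-platform")
    return reasons


plan = ExitPlan(monthly_budget=20.0, session_minutes=30)
print(stop_reasons(plan, spent_this_month=20.0, minutes_elapsed=45,
                   price_changed_mid_chat=True))
```

An empty list means no rule has been triggered; any non-empty list is the signal to end the session.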

9) Relationship context: transparency matters more than technology

In committed relationships, the ethical issue is usually not the existence of a chatbot. It is secrecy and agreement. Couples differ in what they consider acceptable (some treat adult chat like erotica; others treat it as a boundary violation).

The practical rule is simple: if describing the behavior fully to a partner would feel dishonest or require leaving things out, the boundary has not been clearly agreed.

A stable agreement tends to include:

- time limits

- spending limits

- content limits (what is okay, what is not)

- protection of real intimacy routines (date night, honest check-ins)

10) Valentine’s week: reduce vulnerability with a layered plan

Valentine’s week increases comparison and boredom. The healthiest pattern is to place digital companionship after real-world connection, not before it:

- one human touch (voice note, call, coffee plan)

- one public activity (cinema, café, walk, class)

- one comfort ritual (meal, music, tidy space)

- optional: time-boxed digital chat

When real belonging is in place first, a chat tool stays entertainment. When real belonging is absent, the tool is more likely to become a nightly coping mechanism.

Celebrity-persona chat can be harmless fun when consent and transparency are present, and when user boundaries are firm.

The product should never require identity oversharing, secrecy, or escalating payments to feel meaningful. Treat it like any high-stimulation media: optional, contained, and balanced with real life.